maven安装

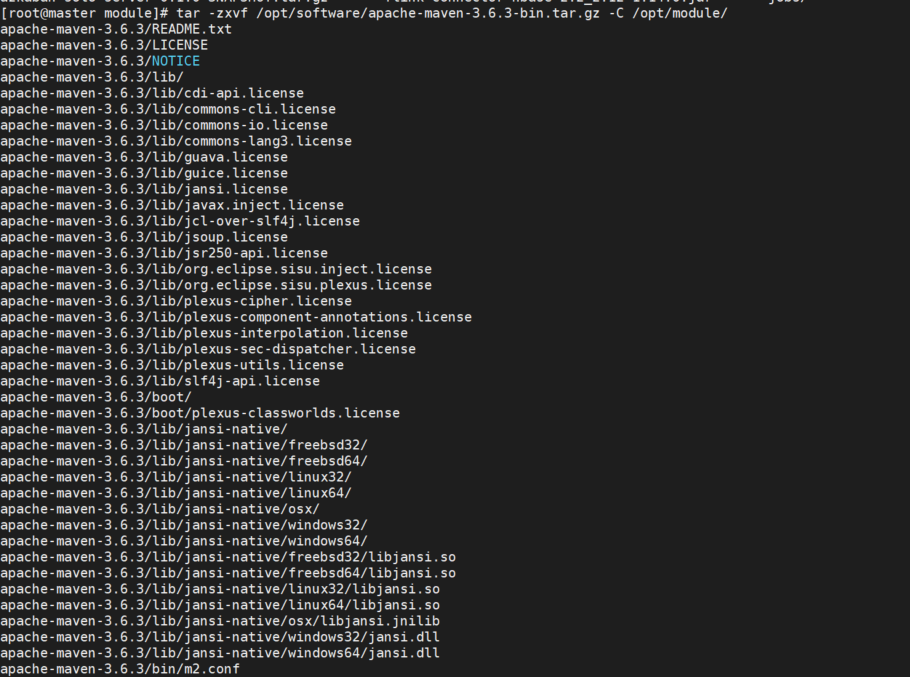

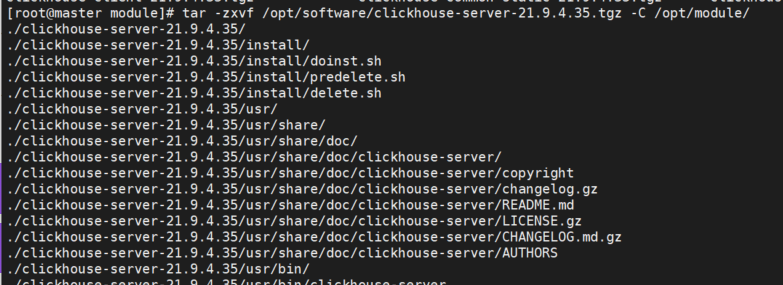

解压

解压maven压缩包

tar -zxvf /opt/software/hudi-0.12.0.src.tgz -C /opt/module/

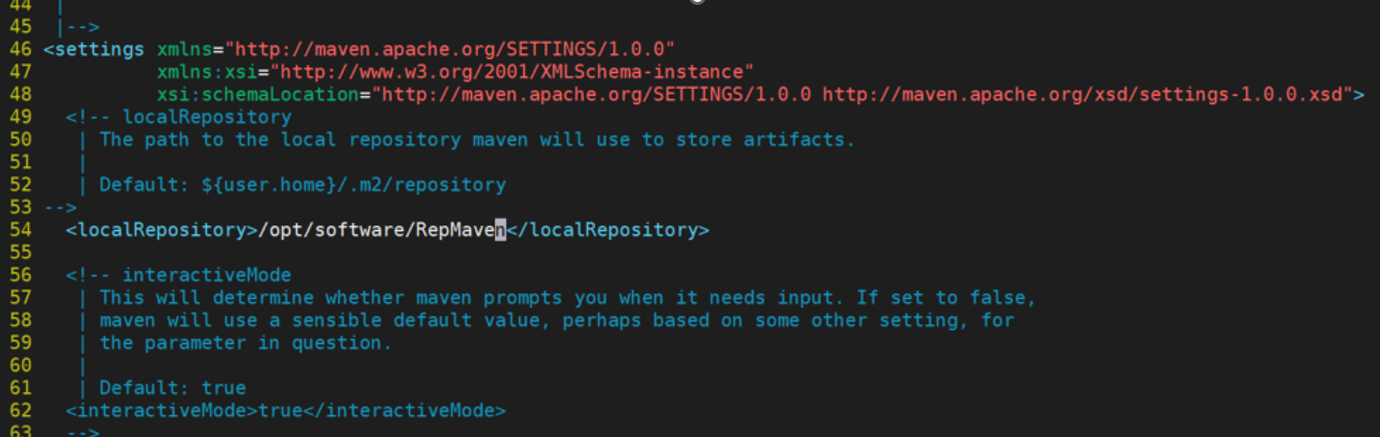

配置本地库

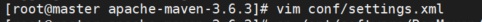

vim conf/settings.xml

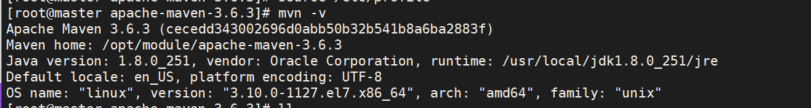

配置系统变量

vim /etc/profileexport MAVEN_HOME=/opt/module/apache-maven-3.6.3

export PATH=$PATH:$MAVEN_HOME/bin查看环境配置结果

hudi 安装

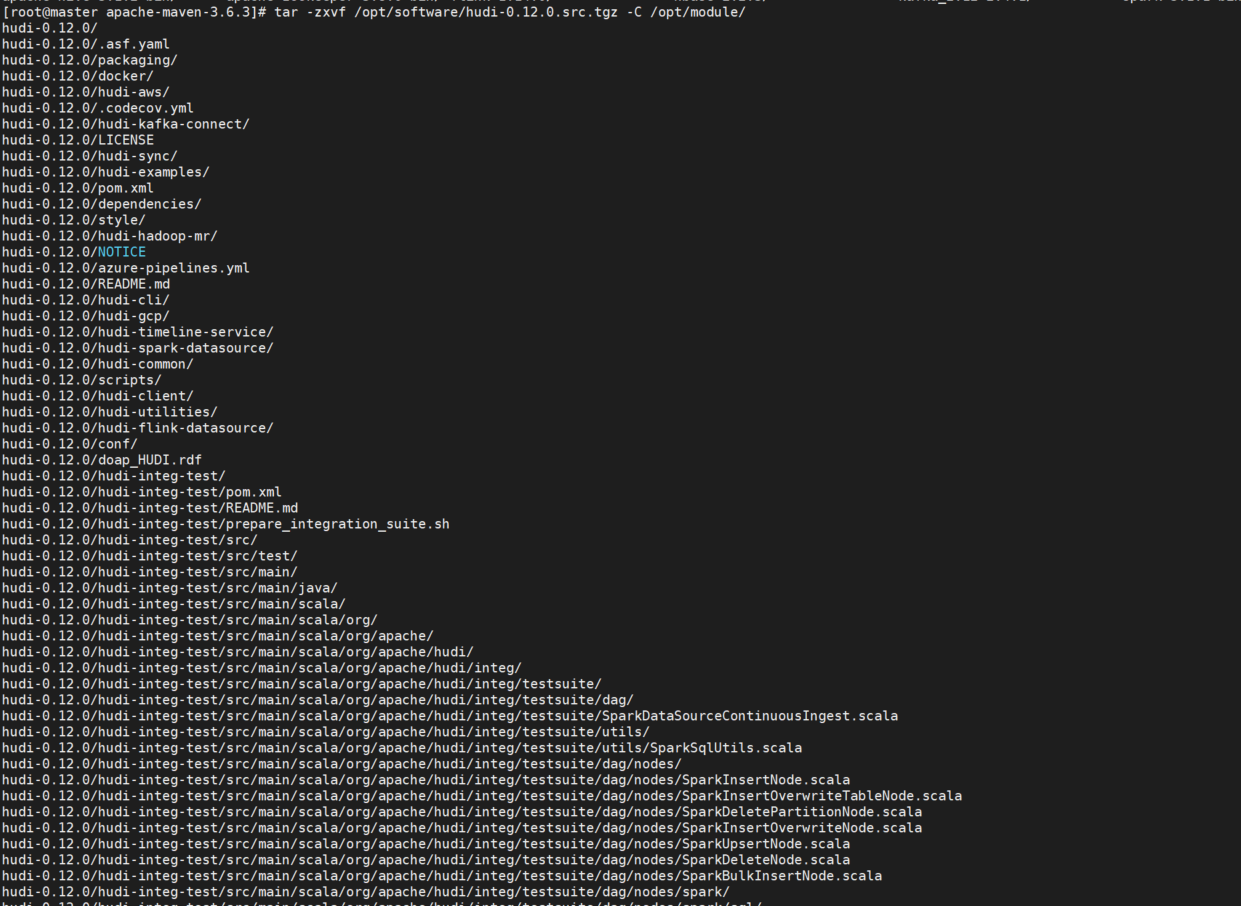

解压

tar -zxvf /opt/software/hudi-0.12.0.src.tgz -C /opt/module/

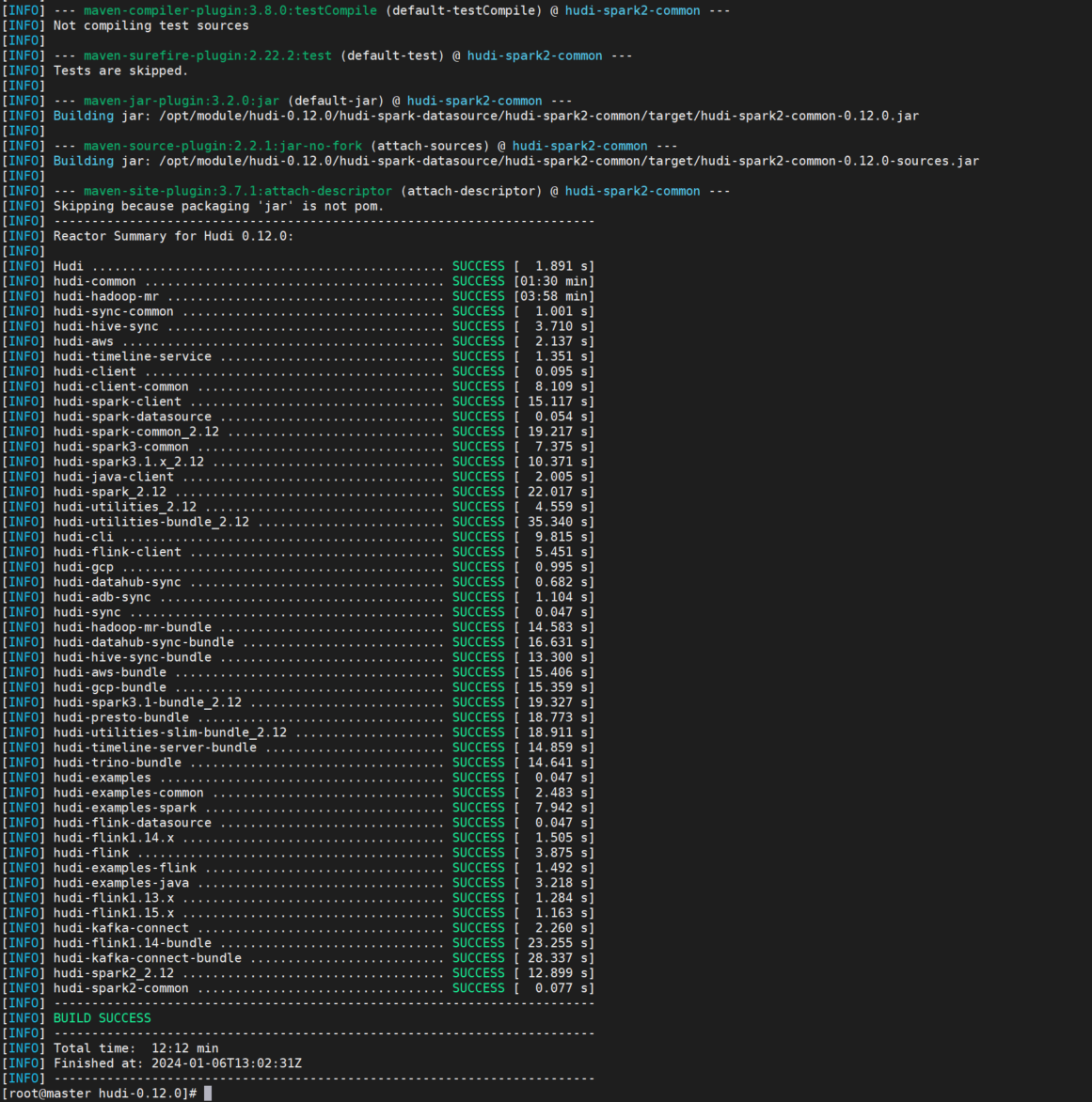

编译

mvn clean package -DskipTests -Dmaven.test.skip=true -Dspark3.1 -Dscala-2.12

编译完成后将spark jar包复制出来

cp /opt/module/hudi-0.12.0/packaging/hudi-spark-bundle/target/hudi-spark3.1-bundle_2.12-0.12.0.jar /opt/module/spark-3.1.1-bin-hadoop3.2/jars/运行

在 spark-shell 运行案例

spark-shell --jars /opt/module/hudi-spark3.1-bundle_2.12-0.12.0.jar --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer' --conf 'spark.sql.extensions=org.apache.spark.sql.hudi.HoodieSparkSessionExtension'在里面粘贴代码

import org.apache.hudi.QuickstartUtils._

import scala.collection.JavaConversions._

import org.apache.spark.sql.SaveMode._

import org.apache.hudi.DataSourceReadOptions._

import org.apache.hudi.DataSourceWriteOptions._

import org.apache.hudi.config.HoodieWriteConfig._

import org.apache.hudi.common.model.HoodieRecord

val tableName = "hudi_trips_cow"

val basePath = "file:///tmp/hudi_trips_cow"

val dataGen = new DataGenerator

val inserts = convertToStringList(dataGen.generateInserts(10))

val df = spark.read.json(spark.sparkContext.parallelize(inserts, 2))

df.write.format("hudi").

options(getQuickstartWriteConfigs).

option(PRECOMBINE_FIELD_OPT_KEY, "ts").

option(RECORDKEY_FIELD_OPT_KEY, "uuid").

option(PARTITIONPATH_FIELD_OPT_KEY, "partitionpath").

option(TABLE_NAME, tableName).

mode(Overwrite).

save(basePath)

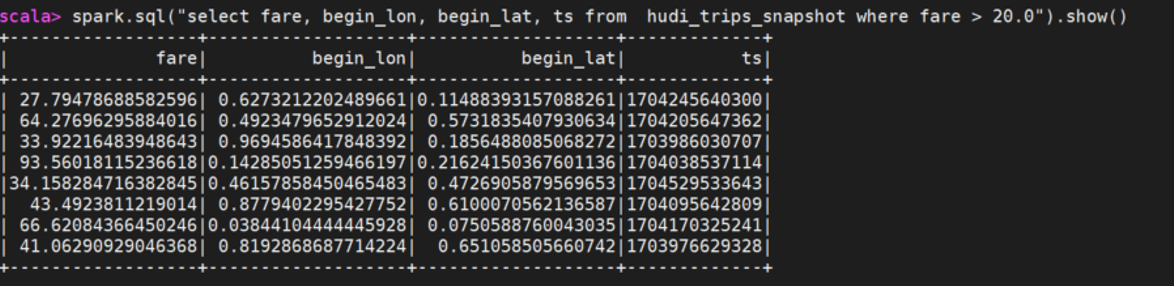

val tripsSnapshotDF = spark.read.format("hudi").load(basePath + "/*/*/*/*")

tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")

spark.sql("select fare, begin_lon, begin_lat, ts from hudi_trips_snapshot where fare > 20.0").show()

评论 (0)